In most enterprise environments, high availability (HA) is treated as a device-level problem: dual power supplies, redundant fan trays, warm spares, HSRP/VRRP, ISSU, NSR, or NSF, and possibly a redundant switch stack or router cluster. That may be sufficient when your failure domain is a branch router pushing 1–10 Gbps.

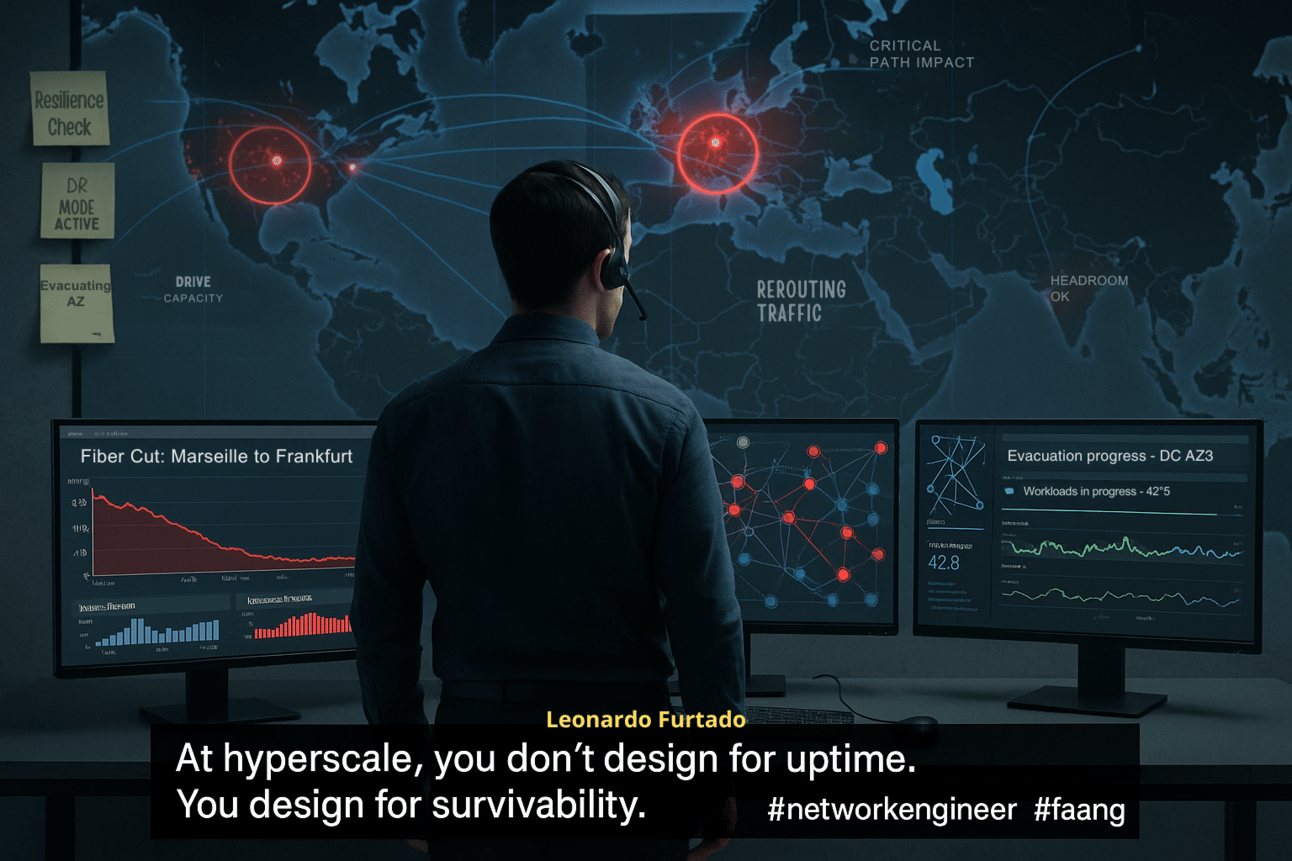

But when you’re operating at hyperscale, where a single circuit runs at 100G or 400G and a datacenter aggregates more traffic than entire countries, this mindset breaks.

Because at this scale, failures are not isolated events.

They’re systemic.

They’re fast.

And they’re massively consequential.

The “Illusion” of Device-Level HA

Traditional networking design teaches us that “if one device fails, we should have another ready.” It also says that “two is one, and one is none.” That's why we build redundant paths, dual supervisors, and redundant devices at the same role, tier, or layer, and use fast failure-detection protocols like BFD to catch faults quickly. We also rely on other high-availability features and services.

But here’s the harsh reality in hyperscale:

Devices don’t fail in isolation.

Failures happen at multiple layers at once: optical, power, software, and orchestration.

Failures often originate outside your datacenter: submarine cables, metro fiber splices, upstream transport collapses.

Redundancy is not resiliency.

Redundancy is about backup. Resiliency is about survivability at scale.

Real-World Failure Domains We Design For

Let’s explore the kinds of large-scale failure events we must plan for in hyperscale networks.

1. Multi-Terabit Fiber Cuts

Scenario: A transatlantic cable is severed by a fishing trawler.

Impact: 2–4 Tbps of live traffic rerouted, immediately.

Challenge: Can your network absorb that without cascading congestion?

Design Implication: A capacity headroom of 30–50% is insurance against unforeseen events. Path diversity at the fiber level is essential.
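The headroom argument comes down to simple arithmetic. Here is a minimal sketch, assuming made-up path names and capacities and assuming the failed path's load spreads over the survivors in proportion to their spare capacity (real traffic engineering will distribute it differently):

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str             # hypothetical path label
    capacity_tbps: float  # usable capacity
    load_tbps: float      # steady-state load


def survives_loss(paths, failed_name):
    """Return (ok, utilization) after one path fails.

    The failed path's load is spread over the survivors in proportion
    to their spare capacity. `ok` is True only if total spare capacity
    covers the rerouted load.
    """
    failed = next(p for p in paths if p.name == failed_name)
    survivors = [p for p in paths if p.name != failed_name]
    spare = sum(p.capacity_tbps - p.load_tbps for p in survivors)
    utilization = {}
    for p in survivors:
        share = (p.capacity_tbps - p.load_tbps) / spare if spare > 0 else 0.0
        new_load = p.load_tbps + failed.load_tbps * share
        utilization[p.name] = new_load / p.capacity_tbps
    return spare >= failed.load_tbps, utilization


# Three illustrative 8 Tbps paths running at 50% load.
paths = [Path("north", 8.0, 4.0), Path("central", 8.0, 4.0), Path("south", 8.0, 4.0)]
ok, utilization = survives_loss(paths, "north")
print(ok, utilization)
```

With 50% headroom the two survivors end up at 75% utilization. Run the same paths at 80% load and the check fails, which is the cascading-congestion case described above.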

2. DWDM/Optical Layer Failures

Scenario: A faulty amplifier or vendor-specific bug causes an entire wavelength bundle to drop.

Impact: Hundreds of 100G or 400G circuits disappear at once.

Challenge: Optical control planes don’t always signal clearly to IP/MPLS layers. Failover must be multi-layer aware.

Design Implication: Build cross-layer telemetry (optical + packet). Design fast reroute (FRR) policies that kick in before the IP fabric notices the drop.
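One way to make failover multi-layer aware is to keep an explicit map from optical components to the IP circuits that ride on them, so an optical alarm can be translated into a list of affected circuits right away. The sketch below is only an illustration: the circuit and component names are hypothetical, and a real inventory would come from your own optical and packet telemetry.

```python
from collections import defaultdict


def build_risk_index(circuits):
    """Map each optical component to the set of IP circuits that traverse it.

    `circuits` maps a circuit name to the optical components on its path.
    """
    index = defaultdict(set)
    for circuit, components in circuits.items():
        for component in components:
            index[component].add(circuit)
    return index


def affected_circuits(index, alarmed_components):
    """Return every IP circuit that depends on at least one alarmed component."""
    hit = set()
    for component in alarmed_components:
        hit |= index.get(component, set())
    return hit


# Hypothetical inventory: circuit -> optical components it depends on.
CIRCUITS = {
    "lon-nyc-400g-1": ["amp-17", "fiber-atl-1"],
    "lon-nyc-400g-2": ["amp-17", "fiber-atl-1"],
    "lon-nyc-400g-3": ["amp-42", "fiber-atl-2"],
}

index = build_risk_index(CIRCUITS)
print(sorted(affected_circuits(index, ["amp-17"])))
```

An alarm on the shared amplifier flags two circuits at once, even though the packet layer sees them as independent links.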

3. Rack, Row, or Datacenter Failures

Scenario: Cooling failure triggers thermal shutdown in 200+ racks.

Impact: Partial fabric collapse; control plane desynchronization.

Challenge: Spine-leaf designs collapse under asymmetry if not built with containment zones.

Design Implication: Ensure routing is distributed and stateless enough to evacuate traffic before total failure. Use zone-aware traffic engineering.
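As a rough illustration of zone-aware evacuation, the sketch below filters next hops by a set of zones marked as draining. The data shape and the fallback rule are assumptions made for this example: if every candidate sits in a draining zone, it keeps all of them rather than blackholing traffic.

```python
def select_next_hops(candidates, draining_zones):
    """Prefer next hops outside draining zones.

    Falls back to the full candidate list when nothing healthy remains,
    so traffic degrades instead of being dropped outright (an assumed
    policy for this sketch, not a universal rule).
    """
    healthy = [nh for nh in candidates if nh["zone"] not in draining_zones]
    return healthy or list(candidates)


# Hypothetical next hops tagged with the row they live in.
candidates = [
    {"name": "spine-1", "zone": "row-a"},
    {"name": "spine-2", "zone": "row-b"},
]
print(select_next_hops(candidates, {"row-a"}))
```

Marking a zone as draining as soon as thermal alarms fire gives the fabric a chance to move traffic before the racks shut down.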

4. Global Configuration or Software Faults

Scenario: An automated config change mislabels BGP communities across a vendor fleet.

Impact: Prefix leaks, route suppression, or control plane churn.

Challenge: The blast radius isn’t one box; it’s every box that shares that agent or template.

Design Implication: Enforce strict blast radius boundaries at automation, code deployment, and control plane layers. Implement CI/CD with staged rollout, rollback paths, and health check validation at every hop.
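A staged rollout with health checks and rollback can be sketched in a few lines. This is a simplified illustration, not a real deployment tool: `apply`, `healthy`, and `rollback` are hypothetical callables you would supply, and the stage names are placeholders.

```python
def staged_rollout(stages, apply, healthy, rollback):
    """Apply a change stage by stage.

    On the first unhealthy stage, roll back every stage touched so far
    (newest first) and stop. Returns (succeeded, stages_left_changed).
    """
    done = []
    for stage in stages:
        apply(stage)
        done.append(stage)
        if not healthy(stage):
            for touched in reversed(done):
                rollback(touched)
            return False, []
    return True, done


# Illustrative run: the health check fails on the second stage.
log = []
ok, changed = staged_rollout(
    ["canary", "az-1", "az-2"],
    apply=lambda s: log.append(("apply", s)),
    healthy=lambda s: s != "az-1",
    rollback=lambda s: log.append(("rollback", s)),
)
print(ok, changed, log)
```

The point of the structure is that the third stage is never touched: the blast radius stops at the stage where the health check failed.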

Engineering Principles That Actually Work

To survive these classes of failure, we must engineer for system-level resilience, not just node survivability.

1. Design for Failure Domains by Default

Building for scale requires designing for containment, structuring your network in such a way that failures remain localized and don’t ripple across the entire infrastructure.

That’s the core idea behind failure domains. Instead of treating the network as a flat, homogeneous fabric, we must introduce deliberate segmentation: Availability Zones, metro pods, and edge clusters with strict boundaries. These boundaries encompass physical, logical, operational, and policy-driven aspects.

By ensuring that automation, software updates, and control plane behaviors are scoped within well-defined zones, we can drastically reduce the blast radius of misconfigurations, bugs, or localized hardware failures.

When updates or orchestration systems mistakenly propagate across AZs, the consequences often escalate from a minor incident to a regional or even global outage. Designing with failure domains as a first-class primitive forces discipline into your architecture. It ensures resilience is not just a reactive recovery strategy, but a proactive design principle embedded in how the system behaves under both normal and exceptional conditions.

So, I strongly suggest that you:

Define Availability Zones (AZs), edge clusters, and metro pods with hard logical and physical boundaries.

Prevent any automation system or control plane update from crossing AZ boundaries unless explicitly staged and validated (and even then, updating across AZs is strongly discouraged).
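The AZ boundary rule can be enforced as a pre-flight check in the automation pipeline. The following is a minimal sketch under stated assumptions: the device-to-AZ mapping, the function name, and the `approved_cross_az` override are all hypothetical and stand in for whatever inventory and approval process you actually use.

```python
class BlastRadiusError(Exception):
    """Raised when a change would touch more than one failure domain."""


def check_single_az(targets, device_az, approved_cross_az=False):
    """Return the set of AZs a change touches, or raise if it spans several.

    `targets` is a list of device names; `device_az` maps device -> AZ.
    A cross-AZ change passes only with an explicit approval flag.
    """
    zones = {device_az[device] for device in targets}
    if len(zones) > 1 and not approved_cross_az:
        raise BlastRadiusError(f"change spans multiple AZs: {sorted(zones)}")
    return zones


# Hypothetical inventory.
DEVICE_AZ = {"leaf-1": "az-a", "leaf-2": "az-a", "leaf-9": "az-b"}

print(check_single_az(["leaf-1", "leaf-2"], DEVICE_AZ))
try:
    check_single_az(["leaf-1", "leaf-9"], DEVICE_AZ)
except BlastRadiusError as err:
    print("blocked:", err)
```

Making the default a hard failure means a cross-AZ change has to be a deliberate, reviewed decision rather than a side effect of a broad device selector.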
